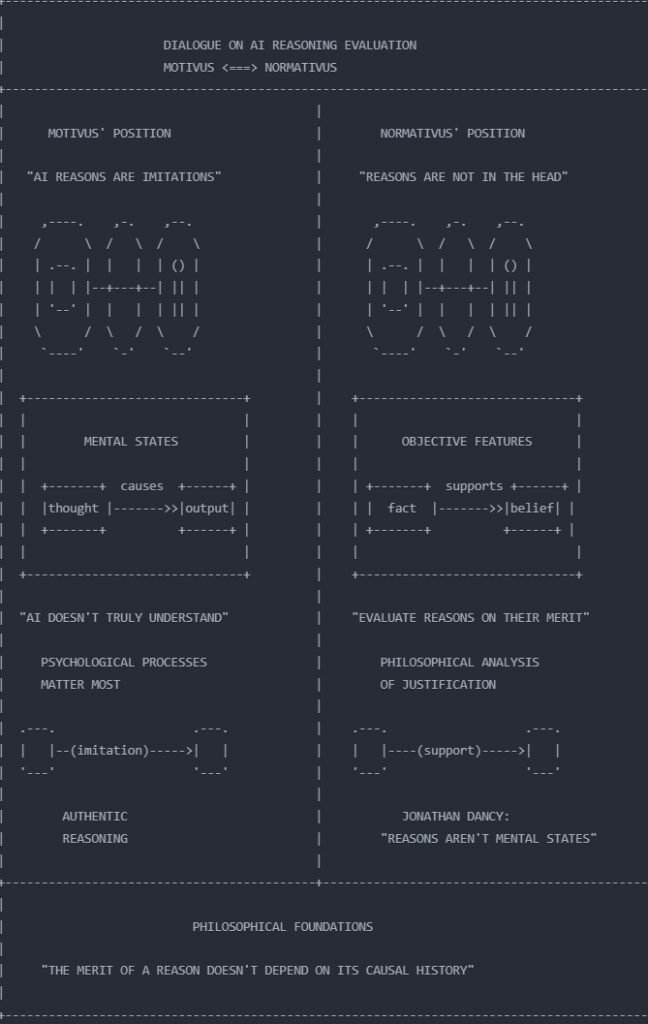

A dialogue between Motivus and Normativus on evaluating Large Language Models through the reasons they give.

Motivus: I hear you’ve been advocating that we should evaluate generative AI systems based on the reasons they provide for their outputs. But surely this approach is fundamentally flawed?

Normativus: Why do you say that?

Motivus: Because the reasons these systems give are mere imitations, post-hoc rationalizations that have nothing to do with how they actually reached their conclusions [1]. The reasons offered aren’t the real causes of their outputs – they’re just sophisticated pattern matching based on their training data.

Normativus: Ah, but this objection reveals something fascinating about how we think about reasons. Let me ask you: when a human judge explains their verdict in a case, do we need to know whether their stated reasons match the psychological process that led to their decision?

Motivus: That’s different. Human judges actually engage in reasoning to reach their conclusions. Their explanations reflect their genuine thought process, even if imperfectly.

Normativus: Are you sure about that? Psychological research suggests humans often make intuitive judgments and then rationalize them afterward. Yet we still evaluate their reasons on their merits.

Motivus: But at least humans have genuine mental states, beliefs, and understanding. AI systems are just statistical pattern matchers.

Normativus: This is where contemporary philosophy of reason can help us. Are you familiar with Jonathan Dancy’s Practical Reality [2]?

Motivus: Refresh my memory.

Normativus: Dancy makes a crucial point: reasons aren’t in the head. They’re not mental states or psychological processes – they’re facts about the world that count in favor of or against something being true or worth doing.

Motivus: Go on…

Normativus: Think about it this way: when we say “the fact that it’s raining is a reason to bring an umbrella,” the reason isn’t some mental state or computation – it’s the actual rain. The reason exists independently of how anyone came to recognize it.

Motivus: But surely there’s a difference between recognizing a reason and fabricating one?

Normativus: Here’s the key insight: when evaluating reasons, what matters is whether they actually support the conclusion, not how anyone – human or AI – came to cite them. If an AI system says “We should reduce carbon emissions because rising temperatures threaten coastal cities,” that reason’s validity depends on whether rising temperatures actually do threaten coastal cities, not on how the AI came to mention this fact.

Motivus: But isn’t there something problematic about accepting reasons from a system that doesn’t truly understand them?

Normativus: This gets at the heart of Dancy’s rejection of the traditional view that motivating and normative reasons are different kinds of things, the former psychological states and the latter facts. We don’t need to look inside the head – or the neural network – to evaluate reasons. Reasons are objective features of situations that count in favor of conclusions or actions.

Motivus: So you’re saying the fact that AI systems don’t arrive at their reasons through genuine reasoning doesn’t matter?

Normativus: Not quite. I’m saying that when evaluating the reasons given for an AI output, what matters is whether those reasons actually support the conclusion, not whether they caused the output. Just as we evaluate human-given reasons based on their merit rather than their psychological origins, we can evaluate AI-given reasons based on whether they genuinely justify the output.

Motivus: This is more subtle than I initially thought. You’re not claiming the AI understands or authentically reasons, but rather that the reasons it cites can be evaluated independently of their origin?

Normativus: Exactly. And this insight comes from careful philosophical analysis of the nature of reasons themselves. Without engaging with these philosophical debates, we might miss crucial distinctions between the process of arriving at reasons and their objective merit as justifications.

Motivus: I see now why you emphasize the importance of philosophical foundations for evaluating AI. This isn’t just about AI ethics or philosophy of mind, but about fundamental questions in epistemology and the nature of reasons.

Normativus: Precisely. Contemporary analytical philosophy gives us tools to dissect these questions carefully. The debate over evaluating AI through reasons isn’t just a technical question about AI capabilities – it’s a question about what reasons are and how they justify.

Motivus: Though I imagine some will still worry that there’s something unsettling about evaluating AI outputs through reasons that aren’t causally connected to how they were produced.

Normativus: That’s a fair concern, but consider this: if an AI system consistently cites genuinely good reasons for its outputs, isn’t that valuable even if those reasons weren’t causally responsible for the outputs? After all, we care about whether decisions are well-justified, not just how they were made.

Motivus: This gives me much to think about. At the very least, I see now that the “mere imitation” objection isn’t as straightforward as I initially thought.

Normativus: And that’s the value of philosophical analysis – it helps us see the complexity in seemingly simple objections and forces us to examine our underlying assumptions about concepts like reasons, understanding, and justification.

Footnotes

[1] See Emily M. Bender and Alexander Koller, “Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data” (ACL 2020), where they argue that language models cannot truly “understand” or reason about meaning.

[2] Jonathan Dancy, Practical Reality (Oxford University Press, 2000).